The problem with headline automation numbers

Automation projects are often introduced with confident figures. A factory reports a 25 percent productivity increase. An RPA deployment claims faster processing times.

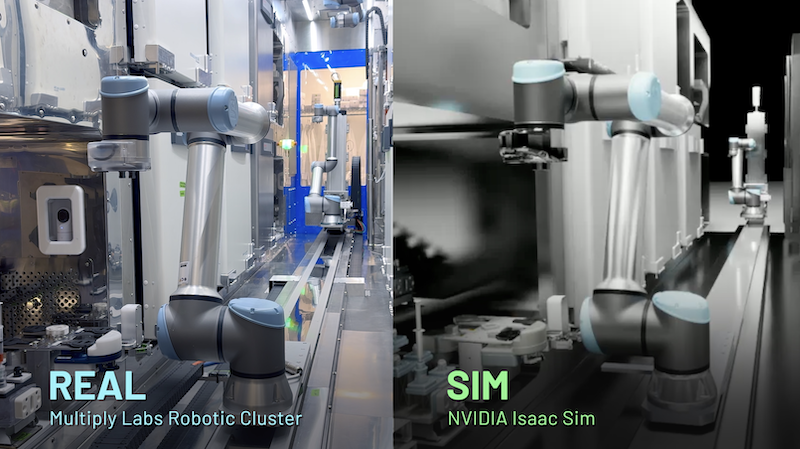

A robotics rollout is said to have reduced downtime significantly. These numbers sound compelling, but they can be misleading if the comparison behind them is poorly constructed.

The problem isn’t the absence of data. It’s how that data is summarised and presented.

Why averages can distort automation outcomes

Most before-and-after comparisons rely on a single headline metric, typically an average improvement across machines, processes, or departments. While averages are useful, they can mask large variations in performance, which are common in automation environments.

Consider a production line where ten robotic cells are upgraded. Two cells deliver exceptional gains thanks to optimal layouts and experienced operators. Several others show modest improvements, while a few struggle during integration.

Reporting a single average improvement suggests uniform success, even though most cells did not perform at that level. This is why analysts often sanity-check reported averages using tools such as an average calculator, to make sure the number reflects overall system behaviour rather than a few standout results.
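The ten-cell scenario can be sketched in a few lines of Python. The gain figures below are invented purely for illustration (they are not measurements from any real line), and the variable names are assumptions; the point is only to show how few cells may actually reach the reported average.

```python
from statistics import mean

# Hypothetical percentage improvements for ten robotic cells (illustrative only).
# Two standout cells, several modest ones, and a few that struggled.
cell_gains = [40.0, 38.0, 12.0, 10.0, 10.0, 9.0, 8.0, 3.0, 2.0, 1.0]

average_gain = mean(cell_gains)

# Count how many cells actually performed at or above the headline average.
cells_at_or_above = [gain for gain in cell_gains if gain >= average_gain]

print(f"Average gain: {average_gain:.1f}%")
print(f"Cells at or above the average: {len(cells_at_or_above)} of {len(cell_gains)}")
```

With these made-up numbers, only the two standout cells reach the average, which is the pattern the headline figure hides.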

When the median tells a more honest story

In situations where performance varies widely, the median often gives a clearer picture of what a typical automation deployment achieves. Unlike averages, medians are less influenced by extreme values at either end of the performance spectrum.

When automation is rolled out across multiple factories, warehouses, or departments, the median result can reveal whether most sites are seeing meaningful gains or whether success is concentrated in a small subset. Reviewing results with a median calculator can quickly expose whether headline improvements are representative or skewed by outliers.
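As a rough sketch of that check, the snippet below places the mean and the median side by side for a set of sites. The site figures and the `summarise` helper are hypothetical, chosen only to show how one outlier pulls the two measures apart.

```python
from statistics import mean, median

def summarise(gains):
    """Return the mean, the median, and the gap between them."""
    avg = mean(gains)
    mid = median(gains)
    return {"mean": avg, "median": mid, "gap": avg - mid}

# Hypothetical percentage gains at seven sites (illustrative only).
# Six sites cluster in single digits; one site is an outlier.
site_gains = [3.0, 4.0, 5.0, 5.0, 6.0, 7.0, 40.0]

summary = summarise(site_gains)
print(summary)
```

A large gap between the two is not proof of a problem, but it is a reasonable prompt to look at the individual sites before quoting the average.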

Comparing before and after results the right way

Another common pitfall lies in how improvements are expressed. Automation results are often presented as absolute changes without sufficient context. A reduction in defect rates from 4 percent to 3 percent may seem small at one percentage point, but relative to the original baseline it is a 25 percent reduction, which is a significant improvement.

Using a percentage difference calculation helps standardise comparisons, making it easier to assess changes across facilities with different starting points, production volumes, or operational constraints. This approach allows decision-makers to compare automation outcomes more fairly and consistently.
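The arithmetic behind the defect-rate example can be written out as a short sketch. The function names are assumptions, and "percentage difference" is interpreted here as change relative to the starting baseline; other definitions exist, so treat this as one reasonable reading rather than the only one.

```python
def absolute_change(before, after):
    """Change in the metric's own units (percentage points for a rate)."""
    return after - before

def relative_change_pct(before, after):
    """Change expressed as a percentage of the starting baseline."""
    if before == 0:
        raise ValueError("baseline must be non-zero for a relative change")
    return (after - before) * 100.0 / before

# Defect rate from the example: 4 percent before, 3 percent after.
defect_abs = absolute_change(4.0, 3.0)
defect_rel = relative_change_pct(4.0, 3.0)

# A second, hypothetical facility with a different starting point.
other_abs = absolute_change(10.0, 9.0)
other_rel = relative_change_pct(10.0, 9.0)

print(f"Facility A: {defect_abs:+.1f} points, {defect_rel:+.1f}% relative")
print(f"Facility B: {other_abs:+.1f} points, {other_rel:+.1f}% relative")
```

Both facilities drop by one percentage point, yet the relative improvement differs, which is why the relative figure makes it easier to compare sites with different baselines.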

Timing and context matter more than speed

Before-and-after comparisons are also sensitive to timing. Early post-deployment data may understate long-term benefits, as systems require tuning and operators need time to adapt.

On the other hand, comparing peak automated performance with long-term manual averages can exaggerate success. Meaningful comparisons depend on consistent timeframes and clearly defined baselines.
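One way to illustrate the timeframe point is to compare equal-length windows on both sides of the deployment, and to contrast that with a peak-based figure. The weekly throughput values and the `matched_window_change_pct` helper below are hypothetical, and the choice of a four-week window is an assumption, not a recommendation.

```python
from statistics import mean

def matched_window_change_pct(before, after, window):
    """Compare the last `window` periods before with the last `window` periods after."""
    if window <= 0 or len(before) < window or len(after) < window:
        raise ValueError("window must be positive and fit inside both series")
    baseline = mean(before[-window:])
    result = mean(after[-window:])
    return (result - baseline) * 100.0 / baseline

# Hypothetical weekly throughput (units per week), illustrative only.
manual_weeks = [100.0, 98.0, 102.0, 100.0]
# Early dip during tuning, one peak week, then a steadier run.
automated_weeks = [90.0, 105.0, 125.0, 114.0, 115.0, 116.0, 115.0]

# Like-for-like: four manual weeks against the latest four automated weeks.
matched_gain = matched_window_change_pct(manual_weeks, automated_weeks, window=4)

# Peak-based: best automated week against the manual average.
manual_average = mean(manual_weeks)
peak_gain = (max(automated_weeks) - manual_average) * 100.0 / manual_average

print(f"Matched four-week windows: {matched_gain:+.1f}%")
print(f"Peak week vs manual average: {peak_gain:+.1f}%")
```

In this made-up series the peak-based figure is noticeably higher than the matched-window figure, while the first post-deployment week on its own would have shown a decline.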

Making better decisions with better metrics

The takeaway for automation leaders is simple. Performance shouldn’t be reduced to a single number. Reliable evaluation combines averages with medians, absolute changes with relative differences, and short-term results with longer-term trends.

In automation, numbers shape investment decisions. Interpreting them carefully is the difference between understanding real progress and being fooled by a good-looking statistic.