The rivalry between Anthropic and OpenAI has intensified, from competing Super Bowl ads to launching new coding models on the same day. Anthropic’s Claude Opus 4.6 and OpenAI’s Codex 5.3 are now live. Both show strong benchmarks, but which one really stands out? I’ll put them to the test and compare their performance on the same tasks. Let’s see which one comes out on top.

OpenAI Codex 5.3 vs Claude Opus 4.6: Benchmarks

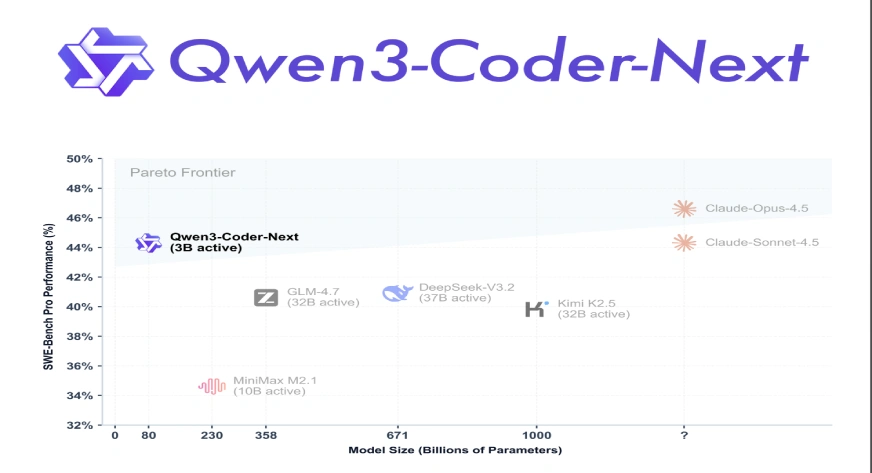

Claude Opus 4.6’s scores for SWE-Bench and Cybersecurity are described as “industry-leading” or “top of the chart” in its release notes, with specific high-tier performance indicated in its system card. Those approximate figures are marked with an asterisk in the table below.

| Benchmark | Claude Opus 4.6 | GPT-5.3-Codex | Notes |
|---|---|---|---|
| Terminal-Bench 2.0 | 81.4% | 77.3% | Agentic terminal skills and system tasks. |
| SWE-Bench Pro | ~57%* | 56.8% | Real-world software engineering (multi-language). |
| GDPval-AA | Leading (+144 Elo) | 70.9% (High) | Professional knowledge work value. |
| OSWorld-Verified | 72.7% | 64.7% | Visual desktop environment usage. |
| Humanity’s Last Exam | First Place | N/A | Complex multidisciplinary reasoning. |
| Context Window | 1 Million Tokens | 128k (Output) | Claude supports 1M input / 128k output limit. |
| Cybersecurity (CTF) | ~78%* | 77.6% | Identifying and patching vulnerabilities. |

Claude Opus 4.6 (Anthropic):

- Focus: Exceptional at deep reasoning and long-context retrieval (1M tokens). It excels at Terminal-Bench 2.0, suggesting it’s currently the strongest model for agentic planning and complex system-level tasks.

- New Features: Introduces “Adaptive Thinking” and “Context Compaction” to manage long-running tasks without losing focus.

Here’s our detailed analysis of Claude Opus 4.6.

GPT-5.3-Codex (OpenAI):

- Focus: Specialized for the full software lifecycle and visual computer use. It shows a massive leap in OSWorld-Verified, making it highly effective at navigating UIs to complete tasks.

- New Features: Optimized for speed (25% faster than 5.2) and “Interactive Collaboration,” allowing users to steer the model in real time while it executes.

Here’s our detailed blog on Codex 5.3.

How to Access?

- For Opus 4.6: I used my Claude Pro account, which costs $17 per month.

- For Codex 5.3: I used the Codex macOS app and logged in with my ChatGPT Plus account (₹1,999/month).

Claude Opus 4.6 vs OpenAI Codex 5.3 Tasks

Now that we’re done with the background, let’s compare the performance of these models. For each task, you’ll find my prompt, the model responses, and my take on the results:

Task 1: Twitter-style Clone (web app)

Prompt:

You are an expert full-stack engineer and product designer. Your task is to build a simple Twitter-style clone (web app) using dummy frontend data.

Use: Next.js (App Router) + React + TypeScript + Tailwind CSS. No authentication, no real backend; just mocked in-memory data in the frontend.

Core Requirements:

- Left Sidebar: Logo, main nav (Home, Explore, Notifications, Messages, Bookmarks, Lists, Profile, More), primary “Post” button.

- Center Feed: Timeline with tweets, composer at the top (profile avatar + “What is happening?” input), each tweet with avatar, name, handle, time, text, optional image, and actions (Reply, Retweet, Like, View/Share).

- Right Sidebar: Search bar, “Trends for you” box (topics with tweet counts), “Who to follow” card (3 dummy profiles).

- Top Navigation Bar: Fixed with “Home” and 2 tabs: “For you” and “Following”.

- Mobile Behavior: On small screens, show a bottom nav bar with icons instead of the left sidebar.

Dummy Data:

- Create TypeScript types for Tweet, User, Trend.

- Seed app with:

  - 15 dummy tweets (short/long text, some with images, varying like/retweet/reply counts).

  - 5 dummy trends (name, category, tweet count).

  - 5 dummy users for “Who to follow”.

Behavior:

- Post Composer: Type a tweet and instantly add it to the top of the “For you” feed.

- Like Button: Toggle liked/unliked state and update like count.

- Tabs: “For you” shows all tweets, “Following” shows tweets from 2–3 specific users.

- Search Bar: Filter trends by name as the user types.

File and Component Structure:

- app/layout.tsx: Global layout.

- app/page.tsx: Main feed page.

- components/Sidebar.tsx: Left sidebar.

- components/Feed.tsx: Center feed.

- components/Tweet.tsx: Individual tweet cards.

- components/TweetComposer.tsx: Composer.

- components/RightSidebar.tsx: Trends + who-to-follow.

- components/BottomNav.tsx: Mobile bottom navigation.

- data/data.ts: Dummy data and TypeScript types.

Use Tailwind CSS to match Twitter’s design: dark text on light background, rounded cards, subtle dividers.

Output:

- Provide a short overview (5–7 bullet points) of the architecture and data flow.

- Output all files with comments at the top for file paths and full, copy-paste-ready code.

- Match imports with file paths used.

Constraints:

- No backend, database, or external API; everything must run with `npm run dev`.

- Use a standard create-next-app + Tailwind setup.

- Keep all content dummy (no real usernames or copyrighted content).

How to Run:

After creating a Next.js + Tailwind project, run the app with the exact commands provided.
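To make the prompt’s data and behavior requirements concrete, here is a minimal TypeScript sketch of what `data/data.ts` and the feed logic might contain. This is not either model’s output. The field names and the helpers `toggleLike`, `addTweet`, `followingFeed`, and `filterTrends` are my own illustrative assumptions; the prompt only names the `Tweet`, `User`, and `Trend` types.

```typescript
// Hypothetical shapes for the prompt's User, Tweet, and Trend types.
// All field names are illustrative assumptions.
interface User {
  id: string;
  name: string;
  handle: string;
}

interface Tweet {
  id: string;
  author: User;
  text: string;
  imageUrl?: string; // optional image
  likes: number;
  liked: boolean; // whether the current viewer has liked this tweet
}

interface Trend {
  name: string;
  category: string;
  tweetCount: number;
}

// Like Button: flip the liked flag and move the count by one.
// Returns a new object so React state updates stay immutable.
function toggleLike(tweet: Tweet): Tweet {
  const liked = !tweet.liked;
  return { ...tweet, liked, likes: tweet.likes + (liked ? 1 : -1) };
}

// Post Composer: put the new tweet at the top of the feed.
// The id scheme is naive and only good enough for dummy data.
function addTweet(feed: Tweet[], author: User, text: string): Tweet[] {
  const tweet: Tweet = {
    id: `t${feed.length + 1}`,
    author,
    text,
    likes: 0,
    liked: false,
  };
  return [tweet, ...feed];
}

// Tabs: "Following" keeps only tweets from a fixed set of handles.
function followingFeed(feed: Tweet[], followedHandles: string[]): Tweet[] {
  return feed.filter((t) => followedHandles.indexOf(t.author.handle) !== -1);
}

// Search Bar: case-insensitive substring match on the trend name.
function filterTrends(trends: Trend[], query: string): Trend[] {
  const q = query.trim().toLowerCase();
  return trends.filter((t) => t.name.toLowerCase().indexOf(q) !== -1);
}
```

In a real component these helpers would be called from state setters, for example mapping over the feed and applying `toggleLike` to the tweet whose id matches the clicked one.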

Output:

My Take:

The Twitter clone built by Claude was noticeably better. Codex did manage to create a sidebar panel, but it had missing images and felt incomplete, while Claude’s version looked far more polished and production-ready.

Task 2: Creating a Blackjack Game

Prompt:

Game Overview:

Build a simple, fair 1v1 Blackjack game where a human player competes against a computer dealer, following standard casino rules. The computer should follow fixed dealer rules and not cheat or peek at hidden information.

Tech & Construction:

- Use HTML, CSS, and JavaScript solely.

- Single-page app with three files: `index.html`, `style.css`, `script.js`.

- No external libraries.

Game Rules (Standard Blackjack):

- Deck: 52 cards, 4 suits, values:

  - Number cards: face value.

  - J, Q, K: value 10.

  - Aces: value 1 or 11, whichever is more favorable without busting.

- Initial Deal:

  - Player: 2 cards face up.

  - Dealer: 2 cards, one face up, one face down.

- Player Turn:

  - Options: “Hit” (take card) or “Stand” (end turn).

  - If the player goes over 21, they bust and lose immediately.

- Dealer Turn (Fixed Logic):

  - Reveal the hidden card.

  - Dealer must hit until 17 or more, and must stand at 17 or above (choose “hit on soft 17” or “stand on all 17s” and state it clearly in the UI).

  - Dealer does not see future cards or override rules.

- Outcome:

  - If the dealer busts and the player doesn’t, the player wins.

  - If neither busts, the higher total wins.

  - Equal totals = “Push” (tie).

Fairness / No Bias Requirements:

- Use a properly shuffled deck at the start of each round (e.g., Fisher-Yates shuffle).

- The dealer must not change behavior based on hidden information.

- Do not rearrange the deck mid-round.

- Keep all game logic in `script.js` for auditability.

- Display a message like: “Dealer follows fixed rules (hits until 17, stands at 17+). No rigging.”

UI Requirements:

- Layout:

  - Top: Dealer section – show dealer’s cards and total.

  - Middle: Status text (e.g., “Your turn – Hit or Stand?”, “Dealer is drawing…”, “You win!”, “Dealer wins”, “Push”).

  - Bottom: Player section – show player’s cards, total, and buttons for Hit, Stand, and New Round.

- Show cards as simple rectangles with rank and suit (text only, no images).

- Display win/loss/tie counters.

Interactions & Flow:

- When the page loads, show a “Start Game” button, then deal initial cards.

- Enable Hit/Stand buttons only during the player’s turn.

- After the player stands or busts, run the dealer’s automatic turn step by step (with small timeouts).

- At round end, show the outcome message and update counters.

- “New Round” button resets hands and reshuffles the deck.

Code Organization:

- Functions in `script.js`:

  - `createDeck()`: Returns a fresh 52-card deck.

  - `shuffleDeck(deck)`: Shuffles the deck (Fisher-Yates).

  - `dealInitialHands()`: Deals 2 cards each.

  - `calculateHandTotal(hand)`: Handles Aces as 1 or 11 optimally.

  - `playerHit()`, `playerStand()`, `dealerTurn()`, `checkOutcome()`.

- Track variables for `playerHand`, `dealerHand`, `deck`, and win/loss/tie counters.

Output Format:

- Briefly explain in 5–7 bullet points how fairness and no bias are ensured.

- Output the full content for:

  - `index.html`

  - `style.css`

  - `script.js`

- Ensure the code is copy-paste ready and consistent (no missing functions or variables).

- Add a “How to run” section: instruct to place the three files in a folder and open `index.html` in a browser.
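For readers who want to check a model’s output against the rules above, here is a minimal sketch of the core game logic. It is not taken from either model’s response. I’ve written it in TypeScript to keep the article’s examples in one language; removing the type annotations gives plain JavaScript suitable for `script.js`. The `Card` shape, the injectable `random` parameter, and the helpers `dealerShouldHit` and `decideOutcome` are my own assumptions, and the dealer rule shown is the “stand on all 17s” option.

```typescript
// Hypothetical card shape; the prompt does not prescribe one.
type Card = { rank: string; suit: string };

const SUITS = ["spades", "hearts", "diamonds", "clubs"];
const RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"];

// Returns a fresh, ordered 52-card deck.
function createDeck(): Card[] {
  const deck: Card[] = [];
  for (const suit of SUITS) {
    for (const rank of RANKS) {
      deck.push({ rank, suit });
    }
  }
  return deck;
}

// Fisher-Yates: walk from the last slot down, swapping each slot with a
// uniformly chosen slot at or before it. `random` is injectable only so the
// shuffle can be tested deterministically; it defaults to Math.random.
function shuffleDeck(deck: Card[], random: () => number = Math.random): Card[] {
  const shuffled = deck.slice(); // leave the caller's deck untouched
  for (let i = shuffled.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    const tmp = shuffled[i];
    shuffled[i] = shuffled[j];
    shuffled[j] = tmp;
  }
  return shuffled;
}

// Count every Ace as 11 first, then downgrade Aces to 1 while the hand busts.
function calculateHandTotal(hand: Card[]): number {
  let total = 0;
  let aces = 0;
  for (const card of hand) {
    if (card.rank === "A") {
      aces++;
      total += 11;
    } else if (card.rank === "J" || card.rank === "Q" || card.rank === "K") {
      total += 10;
    } else {
      total += Number(card.rank);
    }
  }
  while (total > 21 && aces > 0) {
    total -= 10;
    aces--;
  }
  return total;
}

// Fixed dealer rule, "stand on all 17s" variant: the decision depends only
// on the dealer's own total, never on the deck or the player's hand.
function dealerShouldHit(dealerHand: Card[]): boolean {
  return calculateHandTotal(dealerHand) < 17;
}

type Outcome = "player" | "dealer" | "push";

// A player bust loses immediately, so it is checked before the dealer's total.
function decideOutcome(playerTotal: number, dealerTotal: number): Outcome {
  if (playerTotal > 21) return "dealer";
  if (dealerTotal > 21) return "player";
  if (playerTotal === dealerTotal) return "push";
  return playerTotal > dealerTotal ? "player" : "dealer";
}
```

A quick sanity check when reviewing generated code: a hand of Ace, Ace, 9 should total 21 (one Ace counted as 11, the other as 1), and a soft 17 such as Ace plus 6 should make the dealer stand under this variant.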

Output:

My Take:

The gap became even more obvious in the Blackjack game. Codex 5.3 produced a plain, static-looking output. In contrast, Claude Opus 4.6 was well ahead. It delivered a proper green casino table, a much more attractive UI, and an overall engaging web experience.

Claude Opus 4.6 vs OpenAI Codex 5.3: Final Verdict

Opinions on whether Codex 5.3 or Opus 4.6 is better remain divided in the tech community. Codex 5.3 is favored for its speed, reliability in producing bug-free code, and effectiveness in complex engineering tasks, particularly for backend fixes and autonomous execution. On the other hand, Opus 4.6 excels in deeper reasoning, agentic capabilities, and handling long-context problems, and it offers more attractive UI designs. However, it can face challenges with iterations and token efficiency.

After my hands-on experience with both models, for this battle, Codex 5.3 vs Claude Opus 4.6, I’m going with Claude Opus 4.6 🏆.

The overall performance, ease of use, and polished UI made it stand out in the tasks I tested, though Codex 5.3 had its merits in speed and functionality.

Don’t just take my word for it. Put both models to the test yourself and see which one works best for you! Let me know your thoughts.
