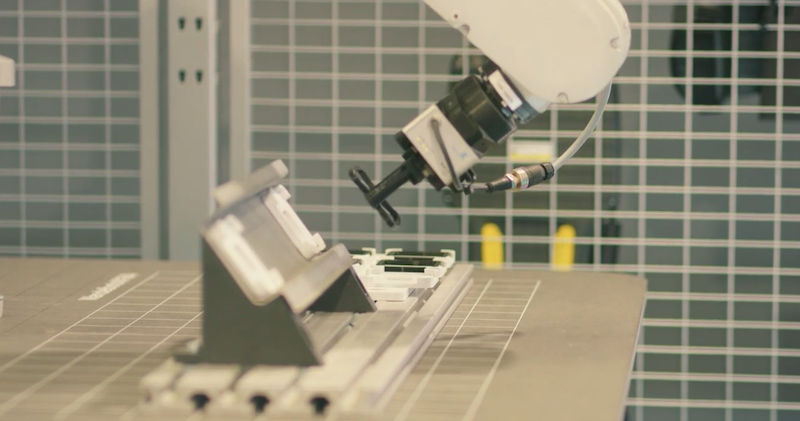

Cosmos Policy represents an early step towards adapting world foundation models for robot control and planning, NVIDIA says. | Source: NVIDIA

NVIDIA Corp. is continuously expanding its NVIDIA Cosmos world foundation models, or WFMs, to tackle problems in robotics, autonomous vehicle development, and industrial vision AI. The company recently released Cosmos Policy, its latest research on advancing robot control and planning using Cosmos WFMs.

Cosmos Policy is a new robot control policy that post-trains the Cosmos Predict-2 world foundation model for manipulation tasks. It directly encodes robot actions and future states into the model, achieving state-of-the-art (SOTA) performance on the LIBERO and RoboCasa benchmarks, said NVIDIA.

The company obtained Cosmos Policy by fine-tuning Cosmos Predict, a WFM trained to predict future frames. Instead of introducing new architectural components or separate action modules, Cosmos Policy adapts the pretrained model directly through a single stage of post-training on robot demonstration data.

The NVIDIA researchers defined a policy as the system’s decision-making brain, which maps observations (such as camera images) to physical actions (like moving a robotic arm) to complete tasks.

What’s different about Cosmos Policy?

The breakthrough of Cosmos Policy is how it represents data, explained NVIDIA. Instead of building separate neural networks for the robot’s perception and control, it treats robot actions, physical states, and success scores just like frames in a video.

All of these are encoded as additional latent frames. These are learned using the same diffusion process as video generation, allowing the model to inherit its pre-learned understanding of physics, gravity, and how scenes evolve over time. “Latent” refers to the compressed, mathematical language a model uses to understand data internally (rather than raw pixels).

As a result, a single model can:

- Predict action chunks to guide robot movement using hand-eye coordination (i.e., visuomotor control)

- Predict future robot observations for world modeling

- Predict expected returns (i.e., a value function) for planning
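One way to picture "actions and success scores as extra latent frames" is the sketch below. It assumes a hypothetical latent frame shape and a simple normalize-and-repeat packing scheme. Both are illustrative assumptions, not NVIDIA's published encoding, and all function names are invented for the example.

```python
from math import prod

LATENT_SHAPE = (16, 8, 8)  # hypothetical (channels, height, width) of one latent frame

def pack_into_latent_frame(values, low, high, shape=LATENT_SHAPE):
    """Scale values to [-1, 1] and repeat them to fill one flattened latent frame."""
    normalized = [2.0 * (v - low) / (high - low) - 1.0 for v in values]
    n_slots = prod(shape)
    return [normalized[i % len(normalized)] for i in range(n_slots)]

def unpack_from_latent_frame(frame, length, low, high):
    """Average the repeated copies, then undo the scaling."""
    n_copies = len(frame) // length
    out = []
    for j in range(length):
        mean = sum(frame[j + k * length] for k in range(n_copies)) / n_copies
        out.append((mean + 1.0) / 2.0 * (high - low) + low)
    return out

# Hypothetical inputs: a chunk of 4 actions for a 7-DoF arm, and a scalar success score
action_chunk = [0.1 * i - 1.0 for i in range(28)]
value_estimate = [0.8]

# Stand-in for encoded camera frames (a real model would produce these with a video tokenizer)
video_latents = [[0.0] * prod(LATENT_SHAPE) for _ in range(3)]
action_frame = pack_into_latent_frame(action_chunk, low=-2.0, high=2.0)
value_frame = pack_into_latent_frame(value_estimate, low=0.0, high=1.0)

# One sequence for the diffusion model to denoise: video frames plus the extra frames
sequence = video_latents + [action_frame, value_frame]
recovered_actions = unpack_from_latent_frame(action_frame, len(action_chunk), -2.0, 2.0)
recovered_value = unpack_from_latent_frame(value_frame, 1, 0.0, 1.0)
```

Because every frame in `sequence` has the same size, a single denoiser can treat actions and values like any other frame, which is the property the article describes.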

All three capabilities are learned jointly within one unified model. Cosmos Policy can be deployed either as a direct policy, where only actions are generated at inference time, or as a planning policy, where multiple candidate actions are evaluated by predicting their resulting future states and values.
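The two deployment modes can be sketched as follows. This is a minimal toy, assuming a 1-D state and hand-written stand-ins for the policy, world model, and value function; none of these functions are part of any NVIDIA API, and in the real system all three predictions come from one model.

```python
import random

def sample_action_chunk(observation, rng, horizon=4):
    """Stand-in for the policy output: propose a chunk of 1-D actions."""
    return [rng.uniform(-1.0, 1.0) for _ in range(horizon)]

def predict_future_state(observation, action_chunk):
    """Stand-in for the world model: toy dynamics where position sums the actions."""
    return observation + sum(action_chunk)

def predict_value(future_state, goal=2.0):
    """Stand-in for the value function: higher when the predicted state is near the goal."""
    return -abs(goal - future_state)

def direct_policy(observation, rng):
    """Direct deployment: generate one action chunk and execute it."""
    return sample_action_chunk(observation, rng)

def planning_policy(observation, rng, num_candidates=16):
    """Planning deployment: score several candidates by predicted value, keep the best."""
    best_chunk, best_value = None, float("-inf")
    for _ in range(num_candidates):
        chunk = sample_action_chunk(observation, rng)
        value = predict_value(predict_future_state(observation, chunk))
        if value > best_value:
            best_chunk, best_value = chunk, value
    return best_chunk, best_value

rng = random.Random(0)
chunk, value = planning_policy(observation=0.0, rng=rng)
```

The planning mode costs `num_candidates` times more model calls per step than the direct mode, which is the trade-off for selecting by predicted outcome.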

More about Cosmos Predict

Recent work in robotic manipulation has increasingly relied on large pretrained backbones to improve generalization and data efficiency, NVIDIA noted. Most of these approaches build on vision-language models (VLMs) trained on large-scale image–text datasets and fine-tuned to predict robot actions.

These models learn to understand videos and describe what they see, but they don’t learn how to physically perform actions. A VLM can suggest high-level actions like “Turn left” or “Pick up the purple cup,” but it doesn’t know how to carry them out precisely.

In contrast, WFMs are trained to predict how scenes evolve over time and to generate temporal dynamics in video. These capabilities are directly relevant to robot control, where actions must account for how the environment and the robot’s own state change over time.

Cosmos Predict is trained for physical AI using a diffusion objective over continuous spatiotemporal latents, enabling it to model complex, high-dimensional, and multimodal distributions across long temporal horizons.

NVIDIA said this design makes Cosmos Predict a suitable foundation for visuomotor control:

- The model already learns state transitions through future-frame prediction.

- Its diffusion formulation supports multimodal outputs, which is critical for tasks with multiple valid action sequences.

- The transformer-based denoiser can scale to long sequences and multiple modalities.
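The second point, multimodal in the sense of several distinct valid solutions, can be illustrated with a toy example. The sketch below assumes a hypothetical task with two equally valid actions and uses resampling of the demonstrations as a stand-in for a trained diffusion sampler. It shows why a model that averages its targets can produce an action no demonstrator ever took.

```python
import random
from statistics import mean

# Hypothetical demonstrations: steer left (-1.0) or right (+1.0) around an obstacle at 0.0
demo_actions = [-1.0, 1.0] * 50

def is_valid(action, clearance=0.5):
    """An action is valid if it steers clear of the obstacle at 0.0."""
    return abs(action) >= clearance

# A regressor trained with mean-squared error converges to the mean of its targets
regression_action = mean(demo_actions)

# A generative policy draws from the demonstrated distribution instead;
# resampling the demos stands in for a trained diffusion sampler here
rng = random.Random(0)
sampled_actions = [rng.choice(demo_actions) for _ in range(10)]

print(is_valid(regression_action))                 # False: the average drives into the obstacle
print(all(is_valid(a) for a in sampled_actions))   # True: every sample commits to one side
```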

Cosmos Policy is built by post-training Cosmos Predict2 to generate robot actions alongside future observations and value estimates, using the model’s native diffusion process. This allows the policy to fully inherit the pretrained model’s understanding of temporal structure and physical interaction while remaining simple to train and deploy.

Inside the early results

Cosmos Policy is evaluated across simulation benchmarks and real-world robot manipulation tasks, comparing against diffusion-based policies trained from scratch, video-based robot policies, and fine-tuned vision-language-action (VLA) models.

Cosmos Policy is evaluated on LIBERO and RoboCasa, two standard benchmarks for multi-task and long-horizon robotic manipulation. On LIBERO, Cosmos Policy consistently outperforms prior diffusion policies and VLA-based approaches across task suites, particularly on tasks that require precise temporal coordination and multi-step execution. (SR is the success rate.)

| Model | Spatial SR (%) | Object SR (%) | Goal SR (%) | Long SR (%) | Average SR (%) |
|---|---|---|---|---|---|
| Diffusion Policy | 78.3 | 92.5 | 68.3 | 50.5 | 72.4 |
| Dita | 97.4 | 94.8 | 93.2 | 83.6 | 92.3 |
| π0 | 96.8 | 98.8 | 95.8 | 85.2 | 94.2 |
| UVA | — | — | — | 90.0 | — |
| UniVLA | 96.5 | 96.8 | 95.6 | 92.0 | 95.2 |
| π0.5 | 98.8 | 98.2 | 98.0 | 92.4 | 96.9 |
| Video Policy | — | — | — | 94.0 | — |
| OpenVLA-OFT | 97.6 | 98.4 | 97.9 | 94.5 | 97.1 |
| CogVLA | 98.6 | 98.8 | 96.6 | 95.4 | 97.4 |
| Cosmos Policy (NVIDIA) | 98.1 | 100.0 | 98.2 | 97.6 | 98.5 |
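The article does not say how the Average SR column is computed. The check below assumes it is the unweighted mean of the four suite scores, and that assumption holds to within rounding for every row that reports all four suites. The numbers are copied from the table, and the 0.051 tolerance allows for averages rounded to one decimal place.

```python
# (Spatial, Object, Goal, Long, reported Average), copied from the LIBERO table
libero_rows = {
    "Diffusion Policy": (78.3, 92.5, 68.3, 50.5, 72.4),
    "Dita": (97.4, 94.8, 93.2, 83.6, 92.3),
    "π0": (96.8, 98.8, 95.8, 85.2, 94.2),
    "UniVLA": (96.5, 96.8, 95.6, 92.0, 95.2),
    "π0.5": (98.8, 98.2, 98.0, 92.4, 96.9),
    "OpenVLA-OFT": (97.6, 98.4, 97.9, 94.5, 97.1),
    "CogVLA": (98.6, 98.8, 96.6, 95.4, 97.4),
    "Cosmos Policy (NVIDIA)": (98.1, 100.0, 98.2, 97.6, 98.5),
}

def suite_mean(scores):
    """Unweighted mean of the per-suite success rates."""
    return sum(scores) / len(scores)

# Largest gap between the recomputed mean and the reported average, per model
gaps = {
    model: abs(suite_mean(row[:4]) - row[4])
    for model, row in libero_rows.items()
}
consistent = all(gap <= 0.051 for gap in gaps.values())
```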

On RoboCasa, Cosmos Policy achieves higher success rates than baselines trained from scratch, demonstrating improved generalization across diverse household manipulation scenarios.

| Model | # Training Demos per Task | Average SR (%) |
|---|---|---|
| GR00T-N1 | 300 | 49.6 |
| UVA | 50 | 50.0 |
| DP-VLA | 3000 | 57.3 |
| GR00T-N1 + DreamGen | 300 (+10000 synthetic) | 57.6 |
| GR00T-N1 + DUST | 300 | 58.5 |
| UWM | 1000 | 60.8 |
| π0 | 300 | 62.5 |
| GR00T-N1.5 | 300 | 64.1 |
| Video Policy | 300 | 66.0 |
| FLARE | 300 | 66.4 |
| GR00T-N1.5 + HAMLET | 300 | 66.4 |
| Cosmos Policy (NVIDIA) | 50 | 67.1 |

In both benchmarks, initializing from Cosmos Predict provides a significant performance advantage over training equivalent architectures without video pretraining, said the NVIDIA researchers.

When deployed as a direct policy, Cosmos Policy already matches or exceeds state-of-the-art performance on most tasks. When enhanced with model-based planning, the researchers said they observed a 12.5% higher task completion rate on average in two challenging real-world manipulation tasks.

Cosmos Policy is also evaluated on real-world bimanual manipulation tasks using the ALOHA robot platform. The policy can successfully execute long-horizon manipulation tasks directly from visual observations, said NVIDIA.

The post NVIDIA adds Cosmos Policy to its world foundation models appeared first on The Robot Report.