By Livija Kasteckaitė

Industrial robotics and automation markets are growing, and that growth brings denser competition and more fragmented buyer journeys. The International Federation of Robotics reports 542,000 industrial robots installed in 2024, more than double the number a decade earlier, with the global operational stock reaching 4,664,000 units in 2024.

At the same time, industrial buying has moved decisively toward self-service digital research. McKinsey reports rising expectations for a sophisticated buying experience, citing a global survey of nearly 4,000 B2B decision makers across 34 sectors.

Search is also changing structurally. Google’s AI features, including AI Overviews and AI Mode, can expand how users discover sources, using techniques like query fan-out and surfacing supporting links. Google is explicit that foundational SEO best practices still apply and that no special optimizations are required specifically for AI features.

If your robotics company is not discoverable when engineers, integrators, and procurement teams research specifications, integration constraints, and total cost of ownership, you lose pipeline before sales ever gets a chance to compete.

Why SEO matters for industrial B2B buyers

In robotics and automation, buying risk is real. A poor fit can mean downtime, safety exposure, rework, and delayed commissioning. That reality shapes how engineers and decision makers evaluate suppliers: they seek evidence that a solution will work in their environment, with their constraints, and within compliance requirements.

The market backdrop intensifies this. The International Federation of Robotics highlights sustained scale in annual installations and a large installed base, which means more vendors, more integrators, and more competing solution approaches.

This is exactly when organic search becomes a strategic channel: it is the most consistent way to be present during early research, shortlist formation, and technical due diligence.

Buyer behavior is also shifting toward digital self-service. McKinsey’s B2B Pulse research describes decision makers balancing self-service digital interfaces with human interactions and being willing to walk away if the buying experience is not sophisticated.

In practice, that means your website is part of the product. If it does not answer technical questions clearly and quickly, the buyer will keep searching.

A practical way to see the urgency is to watch how mainstream robotics adoption is accelerating and how new vendors enter the market. For context on how quickly factory robotics demand is expanding, see this Robotics and Automation News coverage of the IFR figures: Global robot demand in factories doubles over 10 years, according to new report.

How industrial search intent differs from consumer intent

Consumer search often resolves into a quick purchase, with intent expressed through price, availability, and brand preference. Industrial robotics search is rarely that linear. Intent is usually multi-stage and evidence driven:

Engineers search to reduce technical uncertainty, such as payload, reach, repeatability, safety functions, enclosure rating, network protocols, or acceptable cycle time variability.

Procurement searches to validate commercial and operational risk, such as lead times, service coverage, training, spares strategy, and total cost assumptions.

System integrators search for integration realities, such as supported PLC environments, ROS compatibility, vision stack constraints, or application notes.

This has two SEO implications.

First, the query set is broader than product names. You must win problem and application queries, not only brand queries. Second, the content must be structured for decision making: comparison clarity, constraints, and validation assets matter more than persuasive copy.

Google’s guidance to create helpful, reliable content built for people, not for manipulating rankings, aligns well with this industrial intent. If your pages exist to answer real engineering questions better than the alternatives, you are aligned with how modern ranking systems are designed.

Technical SEO issues for complex robotics product pages

Robotics websites usually behave like catalogs plus documentation hubs plus recruitment platforms plus investor relations websites. That complexity creates technical failure modes that quietly erase search visibility.

One recurring issue is duplicate and near-duplicate URLs generated by variants, filters, and parameterized navigation. Google documents canonical handling as the mechanism to consolidate duplicate URLs by signaling the representative version, and it provides explicit guidance on rel canonical implementation.
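As a rough illustration of consolidating parameterized duplicates, the following Python sketch maps variant URLs to one representative URL and renders the matching rel canonical tag. The parameter names in `IGNORED_PARAMS` and the example.com URLs are hypothetical; which parameters are safe to drop depends entirely on your own platform.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only change tracking or presentation,
# not the content of the page. Adjust to your own URL patterns.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "view"}


def canonical_url(url: str) -> str:
    """Return one representative URL for a family of parameterized variants."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # stable parameter order, so ?a=1&b=2 and ?b=2&a=1 match
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), "")
    )


def canonical_link_tag(url: str) -> str:
    """Render the rel canonical element for the <head> of a variant page."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


print(canonical_link_tag("https://example.com/robots/arm-x/?sort=price&utm_source=mail"))
```

Parameters that genuinely change the content, such as a payload filter, are kept in the canonical URL, so only presentation and tracking variants collapse together.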

Another issue is crawl waste. If a site generates large numbers of low-value variant URLs, it can consume resources that should be spent discovering and refreshing key product, application, and documentation pages. Google provides dedicated guidance for large sites on managing crawl budget.

If your catalog uses filter-based navigation patterns, Google also publishes specific guidance on managing crawling for faceted navigation URLs, including the option to prevent crawling via robots.txt if you do not want those filtered URLs indexed.
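One way to sanity-check such a rule before deploying it is to run sample URLs through a robots.txt parser. The sketch below uses Python's standard-library `urllib.robotparser`; the `/catalog/filter/` path and the example.com URLs are hypothetical. The standard-library parser relies on simple prefix matching, so this sketch deliberately avoids wildcard patterns, and its results may not mirror every detail of how Google evaluates a robots.txt file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule set: block crawling of faceted filter URLs only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /catalog/filter/
"""


def crawl_report(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True if the rules allow crawling it, else False."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


sample_urls = [
    "https://example.com/catalog/filter/payload-10kg",  # filtered variant
    "https://example.com/products/arm-x",               # key product page
]
for url, allowed in crawl_report(ROBOTS_TXT, sample_urls).items():
    print("crawlable" if allowed else "blocked  ", url)
```

Running the same check against a list of priority product and documentation URLs is a cheap way to confirm that a new rule does not block pages you want crawled.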

Structured data is another differentiator in technical B2B contexts. Google explains that structured data helps it understand content on a page.

For robotics hardware and accessory pages, product structured data can help search engines interpret commercial intent and key attributes.

The practical rule is simple: mark up what you visibly present, and keep it consistent with the on-page content.
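A minimal sketch of what that can look like: the Python below builds schema.org `Product` markup as JSON-LD from the same values a page template would display. The product name, SKU, brand, and specification values are invented for illustration, and using `additionalProperty` for technical specs is one reasonable modeling choice, not a requirement.

```python
import json


def product_jsonld(name: str, sku: str, brand: str, description: str, specs: dict[str, str]) -> dict:
    """Build schema.org Product markup from values already shown on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "description": description,
        # Technical attributes expressed as schema.org PropertyValue items.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in specs.items()
        ],
    }


def to_script_tag(data: dict) -> str:
    """Wrap the markup in the script element that carries JSON-LD."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Hypothetical product; every value here should also be visible on the page.
markup = product_jsonld(
    name="Arm X collaborative robot",
    sku="AX-10",
    brand="ExampleRobotics",
    description="Six-axis collaborative arm for machine tending.",
    specs={"Payload": "10 kg", "Reach": "1300 mm"},
)
print(to_script_tag(markup))
```

Generating the markup from the same data source as the visible spec table is one way to keep the two from drifting apart.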

Finally, performance is not cosmetic. Google’s Core Web Vitals documentation describes user experience metrics and how to monitor them using Search Console reporting.

Slow documentation portals and heavy product configurators can degrade experience and compound crawl inefficiency.

| SEO priority | Expected impact on qualified traffic | Expected effort |
|---|---|---|
| Indexation hygiene and crawl control | High, because important pages become consistently discoverable and duplicative pages stop competing | Medium to high, depends on platform complexity and URL patterns |
| Structured data and page-level semantics | Medium to high, because search engines can interpret entities and attributes more reliably | Medium, usually engineering and SEO coordination |
| Performance and page experience baseline | Medium, because faster pages improve usability and support sustained crawling | Medium, often requires development and infrastructure work |

Content strategy for engineering audiences

Engineering audiences reward clarity, constraints, and evidence. The most effective robotics SEO content is rarely generic thought leadership. It is usually one of the following:

Application pages that describe the problem, environment constraints, integration requirements, and expected outcomes, with diagrams and implementation caveats.

Documentation that is indexable and internally linked so that key configuration pages do not become orphaned.

Comparison and selection guides that reduce risk, for example how to choose an end effector for a particular task or how to evaluate safety architecture options.

Google’s people-first content guidance encourages self-assessment questions around whether content demonstrates real expertise and satisfies users.

For robotics, “expertise” is not a slogan. It looks like pinout tables, safety function mappings, protocol support matrices, and deployment checklists. It also looks like clear author accountability, test conditions, and versioning.

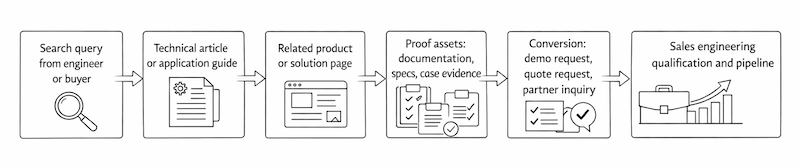

Simply publishing more pages is not the goal. The goal is a coherent funnel where informational research content connects logically to product selection and then to a conversion event.

AI and large language models in search and discovery

AI is now embedded into search experiences that shape visibility and click behavior. Google’s “How AI Overviews in Search work” documentation states that AI Overviews use generative AI to provide key information with links, and that they use a customized Gemini model working in tandem with existing Search systems like ranking systems and the Knowledge Graph.

For site owners, Google’s Search Central guidance is direct: the best practices for SEO remain relevant for AI features, there are no additional requirements to appear in AI Overviews or AI Mode, and no special optimizations are necessary beyond good SEO fundamentals.

Google also notes that these AI features may use a query fan-out technique, issuing multiple related searches across subtopics, and then surfacing supporting pages.

This shifts the strategic focus for robotics brands:

You need content that can serve as a reliable supporting citation for nuanced technical questions.

You need strong internal linking so AI-surfaced discovery can route users to the next page that answers the next question.

AI also intersects with publishing workflows. Google’s guidance on using generative AI content emphasizes creating content that is helpful and, when automation is used, considering transparency about how content was created in a way that makes sense for your audience.

For engineering readers, that can mean disclosing test setups, simulation assumptions, and what has been validated on hardware versus synthesized.

Measurement and KPIs for organic performance

Robotics companies should measure organic search like a pipeline input, not like a vanity traffic chart.

At the platform level, Google Search Console is the primary system of record for how a site performs in Google Search results. Its Performance report includes metrics such as clicks, impressions, and query-level visibility.

Google also states that traffic from AI features is included in overall search traffic in Search Console reporting, within the Web search type.

At the business level, you want to connect organic visibility to the actions your commercial team cares about:

Share of voice for high-intent application queries.

Growth in non-branded impressions for category and problem terms.

Technical health indicators that correlate with discoverability, such as indexed coverage for priority pages and reduced duplication.

Lead quality indicators, such as conversion rate to demo requests and the share of conversions that meet qualification thresholds.

If your analytics setup and CRM attribution model are not specified, it is not possible to prescribe an exact KPI dashboard. However, the best practice is to combine Search Console visibility signals with on-site conversion tracking so you can identify which technical topics and solution pages contribute to revenue outcomes.
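To make two of those indicators concrete, here is a small Python sketch that computes non-branded impressions and simple conversion ratios. It assumes you have already exported query rows (query, clicks, impressions) from a Search Console report and have counts from your own conversion tracking; the `QueryRow` shape, the brand term, and all sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    """One exported row of query-level search data (assumed shape)."""
    query: str
    clicks: int
    impressions: int


def non_branded_impressions(rows: list[QueryRow], brand_terms: list[str]) -> int:
    """Sum impressions for queries that mention none of the brand terms."""
    terms = [term.lower() for term in brand_terms]
    return sum(
        row.impressions
        for row in rows
        if not any(term in row.query.lower() for term in terms)
    )


def rate(numerator: int, denominator: int) -> float:
    """Safe ratio helper; returns 0.0 when there is no denominator."""
    return numerator / denominator if denominator else 0.0


# Hypothetical sample data for illustration only.
rows = [
    QueryRow("acme cobot", clicks=10, impressions=100),
    QueryRow("palletizing robot payload", clicks=5, impressions=400),
]
organic_sessions, demo_requests, qualified_requests = 1200, 12, 3

print("Non-branded impressions:", non_branded_impressions(rows, ["acme"]))
print("Demo request conversion rate:", rate(demo_requests, organic_sessions))
print("Share of qualified conversions:", rate(qualified_requests, demo_requests))
```

Tracking the same ratios per topic or per page template, rather than site-wide, is what lets you tie specific technical content to qualified pipeline.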

Practical first steps for robotics companies

If you want impact in a quarter, not just in a year, start with a disciplined sequence.

First, establish indexation control. Confirm that critical templates can be crawled, that robots.txt is not accidentally blocking important resources, and that you are using noindex correctly when you truly intend to keep pages out of search.

Google is clear that robots.txt is not a mechanism for keeping a page out of Google; it is primarily for controlling crawler access.

Submit and monitor sitemaps to aid discovery, while remembering that a sitemap is a hint, not a guarantee.

Second, fix duplication and parameter sprawl. Implement canonical signals for duplicates and make deliberate decisions about which filtered or parameterized URLs should be crawled or indexed.

Third, publish a small set of engineering-first assets that map to high-intent research. Pick three application areas where you can credibly demonstrate technical leadership and where your sales team wants more qualified conversations. Use Google’s guidance as the filter: the content should be created to benefit people and demonstrate expertise, not to chase rankings.

Fourth, operationalize for AI-shaped discovery. Since AI Overviews and AI Mode can surface a broader set of supporting links and rely on multiple related searches, build internal link paths from explanatory content to product selection content to conversion points.
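One way to audit those link paths is to treat the site as a graph and check that every explanatory page can reach a conversion page by following internal links. The Python sketch below does this with a breadth-first search; the link map and page paths are hypothetical, and in practice the map would come from a crawl of your own site.

```python
from collections import deque


def reachable(links: dict[str, list[str]], start: str) -> set[str]:
    """Return every page reachable from start by following internal links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def pages_without_path(
    links: dict[str, list[str]], sources: list[str], conversion_pages: list[str]
) -> list[str]:
    """List source pages from which no conversion page can be reached."""
    goals = set(conversion_pages)
    return [page for page in sources if not (reachable(links, page) & goals)]


# Hypothetical internal link map: page -> pages it links to.
links = {
    "/guides/choosing-an-end-effector": ["/products/gripper-x"],
    "/products/gripper-x": ["/request-demo"],
    "/guides/safety-architecture": ["/blog/company-news"],
}
guides = ["/guides/choosing-an-end-effector", "/guides/safety-architecture"]
print(pages_without_path(links, guides, ["/request-demo"]))
```

Any page the check returns is a dead end in the funnel: it may rank or be cited, but it gives the reader no route to product selection or a conversion point.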

Finally, if you need specialized help aligning SEO with large language model driven discovery and technical content strategies, work with a partner that explicitly focuses on this intersection, such as LLM SEO Agency.

Two high-authority references worth keeping open while executing are Google’s own documentation on AI features and your website and McKinsey’s research on B2B buying behavior in its B2B Pulse survey insights.